As the Internet of Things (IoT) grows, so does the attention paid to edge computing and intelligence. “We’re currently shifting to this new computing paradigm,” explains TU Delft tenured Associate Professor Aaron Ding. Since the early days of edge computing, Ding’s research has focused on IoT and the power of distributed computing resources deployed at the network edge.

Smart speakers, self-driving cars, healthcare robots – Artificial Intelligence, or AI for short, is playing an increasingly significant role in our lives. The presence of AI will only continue to grow. Therefore, it is crucial to ensure this development proceeds carefully. Aaron Ding explains that much AI technology is managed through the cloud. “The cloud processes information exchanged between users and AI systems. This data is stored in large data centres operated by tech and telecom companies, which use it to train their models and enhance their products and services.”

The cloud offers vast storage advantages, but it also has disadvantages and limitations, Ding says. “For example, data must be transported over long distances. This consumes a lot of energy and brings safety and reliability issues. Consider self-driving cars, for instance. If the Internet connection between the vehicle and the cloud experiences disruptions, it could result in the car receiving critical traffic information too late, potentially leading to catastrophic consequences. Milliseconds can make the difference between life and death.”

Edge AI: Data processing close to the user

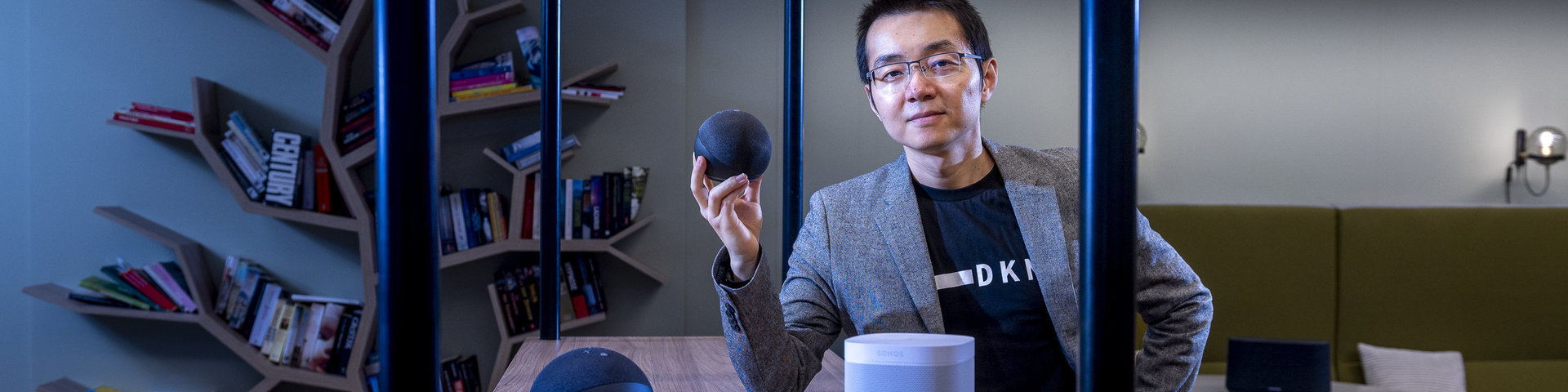

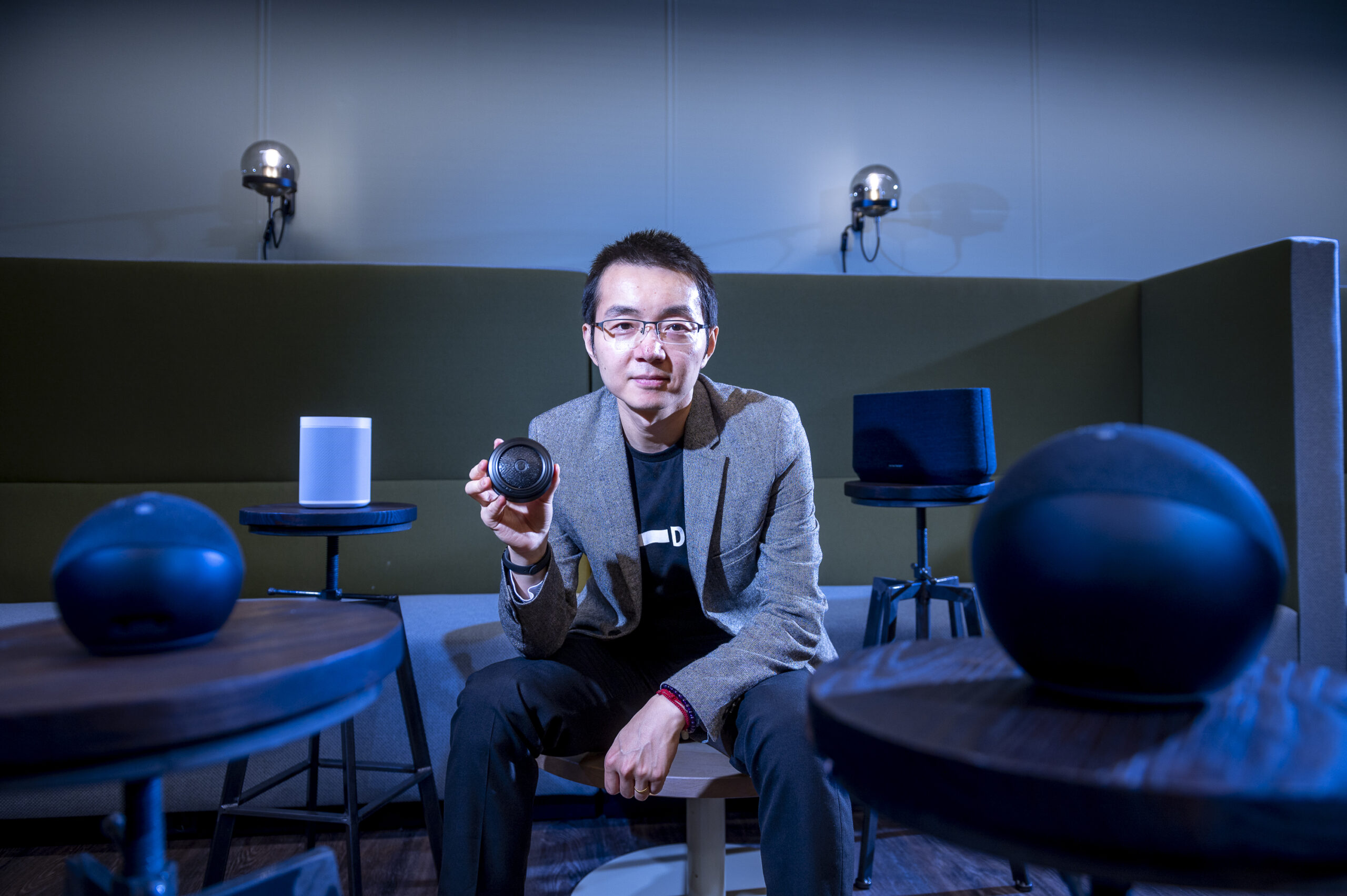

In such cases, Edge AI, the field that Ding is researching, can offer a solution. “Edge AI involves bringing data processing closer to the user. This can be done, for example, with a chip in a smart speaker or with smart sensing units in a traffic light. The device collects and processes data on-site and communicates it back to the user. Since not everything is transmitted through the cloud, the risk of delays or communication issues is reduced. Additionally, it eliminates the need to transmit terabytes of data, resulting in significant energy savings.”

Enhancing privacy protection

Another area where Edge AI provides a solution is privacy protection. When users communicate with an AI device, personal information is often exchanged, Ding explains. “This data can be highly confidential, such as medical information stored on smart speakers used in home healthcare. Through the cloud, this information may end up with companies, giving them access to sensitive data. With Edge AI, you can ensure that only the speaker stores confidential information, and that only the information relevant for improving models is shared, anonymously, with the cloud.”

Striking the right balance

Ding is researching how far data processing can be brought back into the device itself. A chip or hard drive has significantly less storage and processing capacity than the cloud, which means making compromises, Ding notes. “On the one hand, Edge AI can lead to energy savings and reduced risks in terms of safety and privacy. On the other hand, it requires sacrificing some quality and performance. Finding the right balance is therefore a matter of research.”

One of the risks that arises when “compressing” AI models onto a chip, for example, is the presence of biases in data processing. Ding explains, “Research shows that AI developers often assume the user is a middle-aged, Western, white man. This can result in biases in ‘compressed’ models and algorithms. For example, a self-driving car using such algorithms might fail to detect some individuals under certain conditions, such as in the dark, which is highly dangerous. It’s essential to ensure greater inclusivity in Edge AI.”

Design guidelines for Edge AI

Ding incorporates improvements for Edge AI, such as inclusivity, into a series of guidelines he calls “design patterns for Edge AI.” The goal is to make companies and developers more aware of biases, fairness, and privacy issues and how to handle them carefully. He collaborates with the German institute Fraunhofer FOKUS, one of the leading players in Europe for guidelines on open communication systems and artificial intelligence.

Bridging the gap between the cloud and IoT

Despite the functionality Edge AI can take over from the cloud, Ding believes it’s a misconception to think it will ultimately render the cloud obsolete. “Edge AI should be seen as a way to seamlessly connect the cloud and the smart devices and systems we use in our daily lives, often referred to as the Internet of Things (IoT). It acts as a bridge. Edge AI can provide solutions to problems we encounter with the cloud and that current IoT devices cannot fully address.”

Collaboration

According to Ding, the Faculty of Technology, Policy, and Management (TPM) at TU Delft is an ideal place to address Edge AI issues. “This is primarily due to the multidisciplinary nature of the faculty. I collaborate with colleagues in fields such as energy, transportation planning, business modelling, and economics. To achieve effective co-design, you need a broad playing field. Industry partners, such as tech developers, car producers, and telecom companies, are also essential. They have a wealth of technological knowledge but need to know what to consider now and in the future.”

Ding has been active in technology and AI research for more than sixteen years. He has worked around the world, gaining insights into both the scientific and corporate sides of the field. From Nokia to BMW, and from the universities of Cambridge and Columbia to ETH Zürich, Helsinki and Delft, he has explored various aspects of technology and AI. Ding explains, “In the first eight years, I mainly focused on the technological side, but I became increasingly aware of AI’s societal impact. The resource crisis caused by the growing demand for chips and the climate crisis made me want to focus more on the societal aspect of tech. I aim to create smart AI infrastructure systems that are sustainable and prioritise human values. That’s when I shifted toward human-centric AI services.”

Harvesting AI’s potential broadly

Ultimately, Ding hopes that we can reap the benefits of AI’s potential across a wide spectrum. “To achieve this, AI must be sustainable, safe, user-friendly, and trustworthy. It is partly my responsibility to determine what is necessary for this. We also need to consider the problems we may face in the future and how to prepare for them. Solving existing problems also creates new ones. What are they, and how do we deal with them? The constant evolution of AI, such as generative AI and ultra-low-power machine learning, is what makes this field so fascinating.”